Picture this: You're a QA engineer working late on a Friday evening, manually clicking through the same 200 test cases you've executed countless times before. Sound familiar? If you've been in the software testing world for more than five minutes, you've probably lived this nightmare.

The reality is that manual testing, while essential, simply can't keep pace with today's software development demands. Companies are releasing updates weekly, sometimes daily, and expecting the same level of quality that used to take months to achieve. According to the DORA State of DevOps Report, high-performing teams deploy code 200 times more frequently than low performers, yet maintain significantly better quality metrics.

Key Market Statistics:

- The global automation testing market is projected to reach $59.91 billion by 2029, growing at a CAGR of 19.6% according to Research and Markets

- Organizations report 40% faster release cycles with comprehensive test automation per LoadFocus research

- 65% fewer production defects when using automated testing strategies

- 97% of companies have accelerated automation technology adoption post-pandemic according to the World Economic Forum

This is where automation QA becomes not just helpful, but absolutely critical for survival in modern software development.

1. What Actually Is Automation QA?

Let's cut through the marketing jargon. Automation QA is essentially getting computers to do the repetitive testing work that would otherwise drive human testers to insanity. Instead of manually clicking through login flows for the thousandth time, you write scripts that can do it automatically while you focus on more interesting problems.

Key Benefits of Automation QA:

- Speed: Tests execute in minutes rather than hours or days

- Consistency: Automated tests perform identical steps every time

- Scalability: Tests can run in parallel across multiple environments

- Cost-Effectiveness: Reduced long-term testing costs despite initial investment

- 24/7 Testing: Continuous execution without human intervention

But here's what most guides won't tell you: automation isn't just about replacing manual work. It's about fundamentally changing how you think about quality in your software delivery pipeline. When done right, automation QA becomes the backbone that allows teams to move fast without breaking things.

The numbers speak for themselves. Organizations using comprehensive test automation report 40% faster release cycles and 65% fewer production defects, according to recent Grand View Research. But the real game-changer isn't speed – it's confidence. When you have solid automated tests running continuously, you can deploy on Friday afternoon without losing sleep.

2. Why Manual Testing Alone Doesn't Cut It Anymore

Let me share a story that perfectly illustrates the problem. A mid-sized SaaS company I worked with had a mobile app that supported 15 different phone models, 3 operating systems, and 5 major feature sets. Their manual testing process required 40 hours per release cycle just for regression testing.

The Hidden Costs of Manual Testing:

- Time Intensive: 2,000+ hours annually for basic regression testing

- Human Error: Fatigue leads to missed bugs and skipped steps

- Limited Coverage: Resource constraints restrict comprehensive testing

- Scalability Issues: Can't keep pace with rapid development cycles

- Burnout Risk: Repetitive work damages team morale

With weekly releases, they were spending 2,000 hours per year just making sure existing features still worked. That's one full-time person doing nothing but repetitive testing. And this doesn't even count the time spent on new feature testing or the inevitable bugs that slipped through due to human fatigue.

The breaking point came when a critical payment bug made it to production because the tester accidentally skipped a step during their 6-hour testing marathon. That single incident cost them more than implementing automation would have cost for the entire year.

This scenario plays out in companies everywhere. Manual testing creates bottlenecks that slow down releases, burns out teams, and ironically, often results in lower quality due to human error and time constraints.

3. The Real-World Impact of Automation QA

When Netflix migrated to a microservices architecture, they didn't just add a few automated tests – they rebuilt their entire quality strategy around automation. Today, they run millions of automated tests daily across their platform. The result? They can deploy thousands of times per day while maintaining 99.99% uptime for a service used by over 200 million subscribers worldwide.

Netflix's Automation Success Metrics:

- Millions of automated tests run daily

- Thousands of deployments per day

- 99.99% uptime maintained

- 200+ million users served reliably

But you don't need to be Netflix to see dramatic results. Small teams implementing even basic automation report significant improvements. A startup I consulted for reduced their QA cycle from 3 days to 3 hours by automating their core user journeys. This allowed them to ship features 10x faster during their critical growth phase.

4. The Automation QA Trends Actually Worth Your Attention in 2025

Let's be honest – most "trend" articles are just buzzword bingo. But some developments in automation QA are genuinely changing how successful teams work. Here are the ones that are making a real difference in the trenches.

4.1. AI-Powered Testing That Actually Works

Remember when everyone said AI would replace testers? That was nonsense, but AI is definitely changing the game in more practical ways. The most exciting development isn't robots writing all your tests – it's AI helping with the grunt work that nobody wants to do.

Key AI Applications in Testing:

- Automated Test Generation: AI analyzes code and user behavior to create test cases

- Self-Healing Tests: Machine learning adapts tests when UI elements change

- Predictive Analytics: AI predicts potential failure points based on historical data

- Visual Testing: Computer vision validates UI elements and layouts

- Smart Test Selection: AI prioritizes tests most likely to find bugs

Take test maintenance, for example. Anyone who's worked with automated tests knows the pain: developers change a CSS class, and suddenly 50 tests break. Traditional solutions involved manually updating selectors or writing complex XPath expressions that break when someone sneezes.

Now we're seeing tools like Testim and Mabl that use machine learning to automatically adapt when UI elements change. These platforms create multiple identification strategies for each element. When one fails, it automatically tries others. One team I worked with saw their test maintenance time drop from 8 hours per week to less than 2 hours per month.
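Testim and Mabl don't publish their internals, so the following is only a toy sketch of the general idea of keeping several identification strategies per element and falling back when one fails. Elements are plain dicts standing in for a real DOM, and `by_attr` and `find_with_fallback` are hypothetical helpers, not any tool's API.

```python
from typing import Callable, Optional

# Toy "page": each element is a plain dict of attributes (a stand-in for a real DOM).
Element = dict
Locator = Callable[[list], Optional[Element]]


def by_attr(name: str, value: str) -> Locator:
    """Build a locator that returns the first element whose attribute equals value."""
    def locate(page: list) -> Optional[Element]:
        return next((el for el in page if el.get(name) == value), None)
    return locate


def find_with_fallback(page: list, strategies: list) -> tuple:
    """Try each (label, locator) pair in order; return (element, label) for the first hit."""
    for label, locate in strategies:
        element = locate(page)
        if element is not None:
            return element, label
    raise LookupError("no identification strategy matched")


# Several ways of identifying the same "Submit" button, most specific first.
submit_strategies = [
    ("css-class", by_attr("class", "btn-primary")),
    ("test-id", by_attr("data-testid", "submit")),
    ("text", by_attr("text", "Submit")),
]

# After a redesign the CSS class changed, but the test id survived.
page_after_redesign = [
    {"tag": "button", "class": "btn-main", "data-testid": "submit", "text": "Submit"},
]
element, used = find_with_fallback(page_after_redesign, submit_strategies)
print(used)  # test-id
```

A real tool would presumably also report which fallback fired, so someone can update the primary locator instead of letting drift pile up unnoticed.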

AI Testing Market Growth:

- The AI in software testing market is expected to reach $1.5 billion by 2025 according to Grand View Research

- 78% of testers already use or plan to use AI in their workflows per LoadFocus statistics

- 60% reduction in test maintenance effort with AI-powered tools

The real game-changer is AI-assisted test generation. Instead of staring at a blank screen wondering what to test, you can feed an AI tool your user stories and get back a set of realistic test scenarios. It's not perfect, but it's a hell of a lot better starting point than writing everything from scratch.

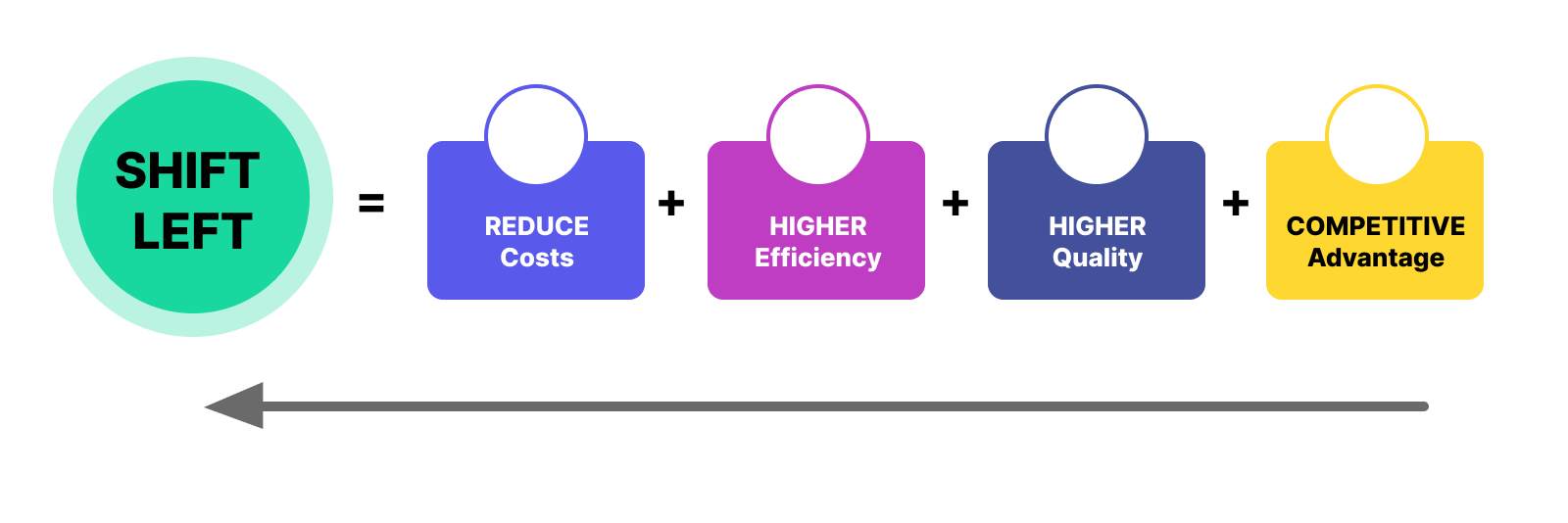

4.2. The Shift-Left Movement Gets Real

"Shift-left testing" used to be one of those consultant buzzwords that sounded smart but didn't mean much in practice. That's changing. Companies are finally figuring out how to actually implement it in ways that don't just create more work for developers.

Shift-Left Testing Benefits:

- 85% reduction in post-production bug costs according to IBM research

- 50% faster release cycles with early testing integration

- Fixing a defect early costs about 15x less than fixing it in production, per NIST studies

- Improved collaboration between developers and testers

The key insight is that shift-left isn't about making developers responsible for all testing. It's about catching the obvious stuff early so QA teams can focus on the complex, interesting problems.

GitHub's approach is a perfect example. They run lightweight smoke tests on every pull request that catch basic functionality breaks in under 2 minutes. This prevents broken code from even making it to the QA environment, which means QA engineers spend time finding real bugs instead of obvious regressions.

Effective Shift-Left Strategies:

- Unit Testing: Developers write tests alongside code

- API Testing: Validate service contracts before UI development

- Static Code Analysis: Identify vulnerabilities during development

- Continuous Testing: Automated tests trigger with every code commit
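As a minimal sketch of "developers write tests alongside code", here is a hypothetical `apply_discount` function with pytest-style tests living right next to it. The function and its rules are invented for illustration. pytest would discover the `test_` functions automatically, but they also run as plain function calls.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the discounted price in cents, rounding down to a whole cent."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


# Tests written in the same change as the code they cover.
def test_applies_percentage():
    assert apply_discount(1000, 15) == 850


def test_rejects_invalid_percent():
    try:
        apply_discount(1000, 120)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a 120% discount")


def test_full_discount_is_free():
    assert apply_discount(999, 100) == 0
```

Because these run in milliseconds, they can gate every commit without anyone noticing the cost.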

The companies doing this well report that QA teams are actually happier, not more stressed. When you're not constantly fighting fires caused by basic issues, you have time to do proper exploratory testing and think about edge cases.

4.3. Self-Healing Tests: Overhyped or Game-Changer?

Self-healing tests are probably the most overhyped trend in automation right now, but there's some substance behind the marketing noise. The promise is simple: tests that automatically fix themselves when the application changes.

Self-Healing Test Capabilities:

- Element Re-identification: Automatically find moved UI elements

- Automatic Retry Logic: Handle temporary failures gracefully

- Dynamic Selectors: Adapt to CSS/ID changes in real-time

- Test Script Updates: Modify test flows based on application changes

The reality is more nuanced. Current self-healing capabilities work well for simple UI changes like CSS class modifications or element relocations. But they fall apart when dealing with actual functional changes or complex interactions.

That said, even limited self-healing can be valuable. One e-commerce company I worked with reduced their test maintenance overhead by 60% using self-healing capabilities for their product catalog tests. When product managers changed category names or moved elements around, the tests adapted automatically instead of breaking.

Popular Self-Healing Tools:

- Sauce Labs - Advanced element identification

- TestComplete - Smart object recognition

- Katalon Studio - Self-healing execution

The key is setting realistic expectations. Self-healing isn't magic – it's just smarter element identification and automatic retry logic. Used wisely, it can eliminate a lot of busywork.
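The "automatic retry logic" half of that description is easy to sketch. The decorator below is a hypothetical helper, not any vendor's API. It re-runs a step only on exception types you declare transient (here `TimeoutError`), waits longer after each failure (exponential backoff), and re-raises once attempts run out.

```python
import functools
import time


def retry(attempts=3, delay=0.1, backoff=2.0, exceptions=(TimeoutError,)):
    """Re-run the wrapped step on transient failures, waiting longer each time."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            wait = delay
            for attempt in range(1, attempts + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == attempts:
                        raise  # out of retries: surface the real failure
                    time.sleep(wait)
                    wait *= backoff
        return wrapper
    return decorator


# Simulated UI step that times out twice before the button becomes clickable.
calls = {"count": 0}


@retry(attempts=3, delay=0.01)
def click_checkout():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("button not clickable yet")
    return "clicked"


print(click_checkout(), calls["count"])  # clicked 3
```

`AssertionError` is deliberately not in the retry list: retrying genuine assertion failures is exactly how "self-healing" ends up hiding real bugs.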

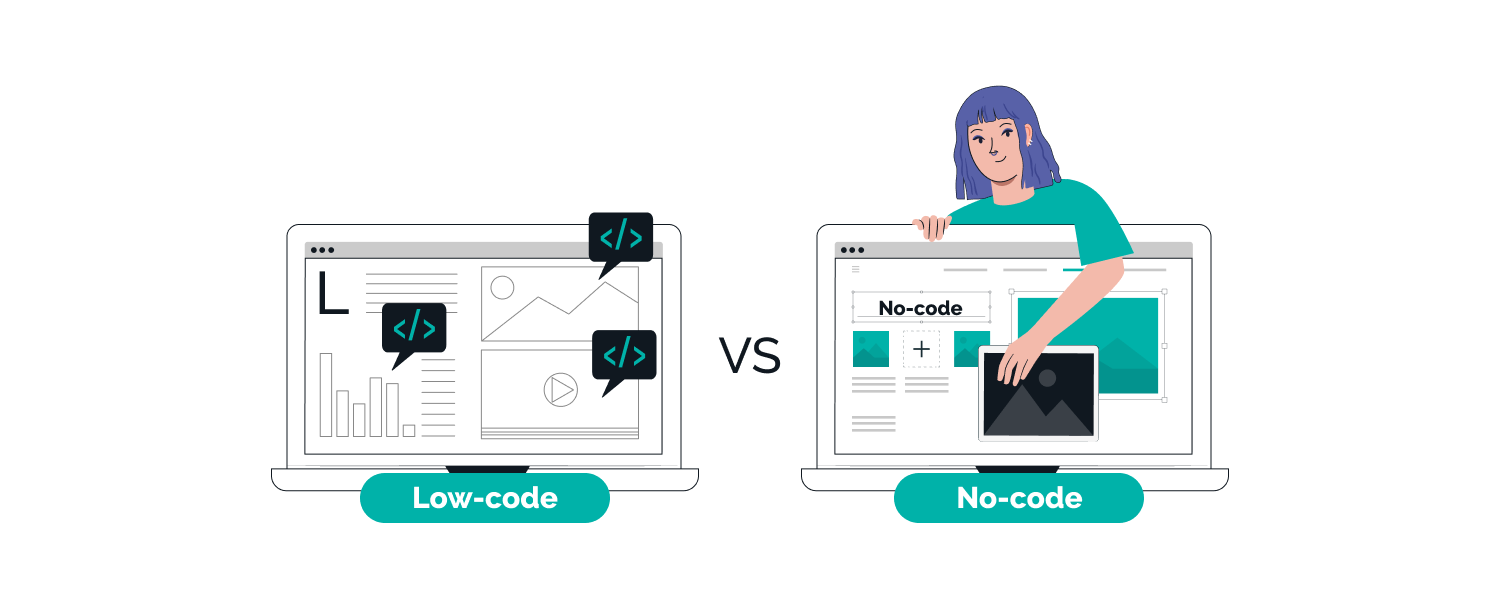

4.4. The Low-Code Testing Revolution

Here's a trend that's actually living up to the hype: low-code and no-code testing platforms. I was skeptical at first, but I've seen teams achieve remarkable results with tools that democratize test automation.

Benefits of Low-Code Testing:

- 40% faster test creation compared to traditional scripting

- Reduced dependency on technical resources

- 70% of test cases can be automated by non-technical team members

- Lower barrier to automation adoption across organizations

The breakthrough isn't just the drag-and-drop interfaces (though those help). It's that these tools are finally good enough to handle real-world complexity while being simple enough for non-programmers to use effectively.

Leading Low-Code Testing Platforms:

- Katalon Studio - Comprehensive test automation platform

- TestComplete - Desktop, web, and mobile testing

- Ranorex Studio - Codeless automation with script access

- Tricentis Tosca - Model-based test automation

A marketing team at a SaaS company I worked with started creating their own automated tests for campaign landing pages using a no-code tool. They went from waiting 2-3 days for QA availability to running tests within hours of deploying changes. This didn't replace the QA team – it freed them up to work on more complex integration and performance testing.

4.5. Cloud Testing: Finally Worth the Migration

Cloud-based testing used to be synonymous with flaky tests and unreliable infrastructure. That's changed dramatically. Modern cloud testing platforms like BrowserStack and Sauce Labs now offer stability that rivals on-premise setups, with the added benefit of massive scale.

Cloud Testing Market Growth:

- 65% of companies now use cloud-based testing solutions according to LoadFocus research

- $200,000 annual savings for enterprises moving from on-premise labs

- 30 minutes vs 2 days for cross-browser testing completion with cloud platforms

- 99.9% uptime reliability for leading cloud platforms like AWS and BrowserStack

The real advantage isn't just the infrastructure – it's the speed of iteration. Instead of waiting for the local device lab to be available, teams can spin up tests across dozens of browser and device combinations instantly. A fintech company I worked with reduced their cross-browser testing time from 2 days to 30 minutes by moving to cloud infrastructure.
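Fanning a suite out across browser and device combinations is mostly a scheduling problem. This sketch assumes a hypothetical `run_smoke_suite` that would open one remote session on a cloud grid. Here it only returns a simulated result (one combination is rigged to fail), so the fan-out and result collection can be shown with the standard library alone.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

BROWSERS = ["chrome", "firefox", "safari"]
DEVICES = ["desktop", "tablet", "phone"]


def run_smoke_suite(browser: str, device: str) -> tuple:
    """Hypothetical stand-in for running one suite on one remote browser/device."""
    # A real version would start a remote WebDriver or Playwright session here.
    passed = not (browser == "safari" and device == "phone")  # simulated failure
    return browser, device, passed


def run_matrix(max_parallel: int = 5) -> list:
    """Run every combination concurrently and return only the failures."""
    combos = list(product(BROWSERS, DEVICES))
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        results = list(pool.map(lambda combo: run_smoke_suite(*combo), combos))
    return [result for result in results if not result[2]]


failures = run_matrix()
print(failures)  # [('safari', 'phone', False)]
```

Threads are enough here because each real session would spend nearly all its time waiting on the remote grid. `max_parallel` would typically match the concurrency your cloud plan allows.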

Top Cloud Testing Platforms:

- AWS Device Farm - Mobile app testing on real devices

- BrowserStack - Cross-browser and mobile testing

- Sauce Labs - Comprehensive cloud testing platform

- LambdaTest - Cross-browser testing platform

The economics are compelling too. One large enterprise calculated they were spending $200,000 annually on device labs and infrastructure maintenance. Moving to cloud testing reduced that to $60,000 while actually improving their testing coverage.

4.6. API Testing Takes Center Stage

With microservices architecture becoming the default for new applications, API testing has evolved from a nice-to-have to absolutely critical. The challenge isn't just testing individual APIs – it's testing the complex interactions between dozens of services.

API Testing Growth Statistics:

- 70% of organizations prioritize API testing in their automation strategy

- 5 minutes average test time vs 45 minutes for full integration tests

- 99% of test runs succeed with contract testing vs 70% with traditional end-to-end integration tests

- 60% faster feedback loops with API-first testing approaches

Contract testing has emerged as the solution to this problem. Instead of trying to test every possible integration in a fully integrated environment, teams are using tools like Pact to test the contracts between services independently. This catches integration issues early while keeping tests fast and reliable.
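Pact's real workflow is considerably richer than this. Consumer tests generate a pact file, and the provider verifies it, often through a broker. The stdlib toy below is not Pact's API. It only illustrates the core idea: the consumer declares the fields and types it relies on, and the provider's responses are checked against that contract in isolation. `ORDER_CONTRACT` and `verify_contract` are hypothetical.

```python
# Consumer side: the fields (and types) the frontend actually relies on.
ORDER_CONTRACT = {"id": str, "status": str, "total_cents": int}


def verify_contract(response: dict, contract: dict) -> list:
    """Return a list of contract violations; an empty list means compatible."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(response[field]).__name__}"
            )
    return problems


# Provider side: a sample response. Extra fields the consumer ignores are fine.
provider_response = {"id": "A-100", "status": "shipped", "total_cents": 4599, "carrier": "UPS"}
print(verify_contract(provider_response, ORDER_CONTRACT))  # []

# A provider change that serialises the total as a string breaks the consumer.
breaking_response = {"id": "A-100", "status": "shipped", "total_cents": "45.99"}
print(verify_contract(breaking_response, ORDER_CONTRACT))
# ['total_cents: expected int, got str']
```

Neither check needs the other service running, which is why contract tests stay fast and immune to environment flakiness.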

Leading API Testing Tools:

- Postman - Comprehensive API development platform

- REST Assured - Java-based API testing library

- Karate - Open-source API testing framework

- Insomnia - API design and testing platform

- Pact - Contract testing framework

One e-commerce platform I worked with had integration tests that took 45 minutes to run and failed 30% of the time due to environmental issues. By implementing contract testing, they reduced test time to 5 minutes with a 99% success rate. The secret was testing the interface contracts rather than trying to orchestrate complex end-to-end scenarios.

4.7. Security Testing Gets Automated

Security testing used to be something that happened "later" – usually right before release when it was too expensive to fix anything significant. That's changing as security tools get better at integrating into development workflows.

Security Testing Automation Benefits:

- Real-time vulnerability detection during development

- 90% reduction in false positives with ML-powered tools

- Integration with CI/CD pipelines for continuous security

- Immediate feedback instead of waiting weeks for security reports

The breakthrough has been tools like Snyk and Checkmarx that can scan for vulnerabilities in real-time as developers write code. Instead of getting a 500-page security report three weeks before launch, teams now get immediate feedback about security issues as they introduce them.

Top Security Testing Tools:

- OWASP ZAP - Open-source security testing proxy

- Burp Suite - Web application security testing

- Veracode - Application security platform

- SonarQube - Code quality and security analysis

Static Application Security Testing (SAST) tools have also gotten much smarter about reducing false positives. Early SAST tools would flag thousands of potential issues, most of which weren't actually exploitable. Modern tools use machine learning to focus on real vulnerabilities, making security testing actually actionable rather than just noise.
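Commercial SAST tools rely on data-flow and taint analysis far beyond this, but the basic mechanism (inspect code without running it, then flag risky patterns) fits in a few lines using Python's `ast` module. `find_dangerous_calls` is a hypothetical toy, not how any listed tool works.

```python
import ast

DANGEROUS_CALLS = {"eval", "exec"}


def find_dangerous_calls(source: str) -> list:
    """Return sorted (line, name) pairs for direct calls to names in DANGEROUS_CALLS."""
    findings = []
    for node in ast.walk(ast.parse(source)):  # parses only; never executes the code
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id in DANGEROUS_CALLS):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


sample = """
user_input = input()
result = eval(user_input)
print(result)
"""
print(find_dangerous_calls(sample))  # [(3, 'eval')]
```

This naive name match shows where false positives and negatives come from. A harmless local function that happens to be named `eval` gets flagged, while `builtins.eval(...)` slips through. Smarter tools narrow this gap by tracking where untrusted data actually flows.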

5. Tools That Actually Matter in 2025

Let's talk about the tools that teams are actually using to get work done, not just the ones with the biggest marketing budgets.

5.1. The Selenium Ecosystem Matures

Selenium is still the backbone of most web automation, but the ecosystem around it has evolved significantly. The biggest improvement is Selenium Grid 4, which finally makes distributed testing reliable and easy to set up.

Selenium Grid 4 Improvements:

- Better reliability for distributed testing

- Enhanced debugging capabilities

- Improved performance and stability

- Cloud integration support

- Container-friendly architecture

But the real innovation is happening in the layers above Selenium. Tools like WebdriverIO and Nightwatch have made Selenium much more approachable for teams that don't want to become Selenium experts. These frameworks handle the complexity while giving you the power and flexibility of Selenium under the hood.

5.2. Playwright Gains Serious Traction

Microsoft's Playwright has quickly become the choice for teams starting new automation projects. The killer features are built-in waiting strategies and multi-browser support that actually works reliably.

Playwright Key Advantages:

- Multi-browser support: Chromium (Chrome, Edge), Firefox, and WebKit (the engine behind Safari)

- Built-in waiting strategies reduce flaky tests

- Modern web app support out of the box

- Auto-wait for elements to be actionable

- Network interception and API testing capabilities

What sets Playwright apart is that it was designed with modern web applications in mind. Single-page applications, complex JavaScript frameworks, and dynamic content that used to break Selenium tests work smoothly with Playwright out of the box.

Playwright vs Selenium Comparison:

| Feature | Playwright | Selenium |
|---|---|---|
| Setup Complexity | Simple | Complex |
| Multi-browser | Native | Requires configuration |
| Auto-waiting | Built-in | Manual implementation |
| Mobile Testing | Limited | Third-party tools |
| Community | Growing | Mature |
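The "Manual implementation" entry for auto-waiting is easier to picture in code. Below is a minimal, framework-agnostic sketch of the polling wait that Playwright performs for you before actions. In Selenium you would normally use `WebDriverWait` with `expected_conditions` rather than hand-rolling it. `wait_until` and the simulated spinner are hypothetical.

```python
import time


def wait_until(condition, timeout: float = 5.0, poll_interval: float = 0.1):
    """Poll condition() until it returns something truthy, or raise TimeoutError."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(poll_interval)


# Simulated page: a loading spinner that disappears about 0.3 s after "navigation".
navigated_at = time.monotonic()


def spinner_gone() -> bool:
    return time.monotonic() - navigated_at > 0.3


print(wait_until(spinner_gone, timeout=2.0, poll_interval=0.05))  # True
```

Polling a condition with a deadline is what replaces fixed `sleep()` calls, which are the classic source of slow, flaky UI tests.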

5.3. Cypress: Great for the Right Use Cases

Cypress continues to be popular for teams focused primarily on web applications. The developer experience is excellent, and the real-time test runner makes debugging much easier than traditional tools.

Cypress Strengths:

- Excellent developer experience with real-time debugging

- Time-travel debugging for test failures

- Automatic screenshots and videos for failed tests

- No WebDriver dependency

- Fast test execution for supported browsers

The limitation is still mobile and cross-browser support, but for teams building primarily for Chrome and Firefox, Cypress offers one of the smoothest automation experiences available.

5.4. API Testing Tools Consolidate

The API testing space has consolidated around a few key players. Postman has evolved from a simple API client to a comprehensive testing platform. For teams that need more programmatic control, REST Assured (for Java) and requests (for Python) remain the go-to choices.

Leading API Testing Solutions:

- Postman - Visual interface with collaboration features

- Insomnia - Clean, developer-focused API client

- REST Assured - Java library for API testing

- Karate - BDD-style API testing framework

The trend is toward tools that can handle both manual exploration and automated testing in the same platform. This reduces the friction between ad-hoc testing and formal automation.

6. How to Actually Implement Automation QA (Without Losing Your Mind)

Here's where most automation QA guides fall apart – they tell you what to do but not how to actually do it in the real world, with real constraints, real legacy code, and real stakeholders breathing down your neck.

6.1. Start Small, Think Big

The biggest mistake teams make is trying to automate everything at once. I've seen organizations spend six months building elaborate automation frameworks that nobody uses because they're too complex and brittle.

Smart Automation Starting Points:

- Single critical user flow (e.g., login/registration)

- Core revenue-generating features

- Most time-consuming manual tests

- Highest-risk functionality

Instead, start with your most painful manual testing scenario. For most teams, this is regression testing of core user flows. Pick one critical path through your application – like user registration and login – and automate just that. Get it working reliably in your CI pipeline before you move on to anything else.
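To make "automate just that one path" concrete, here is a minimal sketch of a registration-then-login check. It runs against `FakeAuthService`, a hypothetical in-memory stand-in. In a real suite, the same steps would drive your actual UI or API, and only the assertions would carry over.

```python
import secrets


class FakeAuthService:
    """Hypothetical in-memory stand-in for the application's auth backend.

    Test double only: it stores passwords in plain text, which real code must never do.
    """

    def __init__(self):
        self._passwords = {}  # email -> password
        self._sessions = {}   # session token -> email

    def register(self, email: str, password: str) -> None:
        if email in self._passwords:
            raise ValueError("email already registered")
        self._passwords[email] = password

    def login(self, email: str, password: str):
        """Return a session token on success, or None for bad credentials."""
        if email not in self._passwords or self._passwords[email] != password:
            return None
        token = secrets.token_urlsafe(16)
        self._sessions[token] = email
        return token

    def whoami(self, token: str):
        return self._sessions.get(token)


def test_register_then_login():
    auth = FakeAuthService()
    auth.register("ana@example.com", "s3cret!")
    token = auth.login("ana@example.com", "s3cret!")
    assert token is not None
    assert auth.whoami(token) == "ana@example.com"


def test_wrong_password_is_rejected():
    auth = FakeAuthService()
    auth.register("ana@example.com", "s3cret!")
    assert auth.login("ana@example.com", "wrong") is None
    assert auth.login("nobody@example.com", "s3cret!") is None
```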

Implementation Timeline:

- Week 1-2: Identify and automate single flow

- Week 3-4: Integrate with CI/CD pipeline

- Month 2: Add 2-3 additional critical paths

- Month 3-6: Expand systematically based on ROI

A B2B SaaS company I worked with started by automating just their login flow. It took two weeks and saved them 30 minutes per release. Not earth-shattering, but it proved the concept and got buy-in for expanding automation.

6.2. The 70-20-10 Rule

Here's a framework that actually works for deciding what to automate:

70% - API and Integration Tests

- Fast execution (seconds vs minutes)

- Reliable and stable

- Catch business logic errors

- Easy to maintain

- High ROI

20% - Critical UI Paths

- Revenue-generating flows

- Core user workflows

- High-impact user journeys

- Customer-facing features

10% - Edge Cases and Experiments

- New tool evaluation

- Complex scenarios

- Nice-to-have features

- Learning opportunities

70% of your automation effort should go to API and integration tests. These tests are fast, reliable, and catch the bugs that actually matter to users. They're also easier to maintain because APIs change less frequently than UIs.

20% should go to critical UI paths. Focus on the flows that generate revenue or would cause major user pain if broken. For an e-commerce site, this might be checkout. For a SaaS app, it might be the core workflow that users perform daily.

10% can go to edge cases and experimental automation. This is where you try new tools and techniques without risking your core test suite.

Test Data: The Thing Nobody Talks About

Most automation projects fail because of test data problems, not technical issues. Your tests need consistent, reliable data to work with, and production data is usually a terrible choice for automation.

The solution is synthetic test data that's designed specifically for automation. Create data that represents real scenarios but is predictable and renewable. One fintech company I worked with reduced their test flake rate from 40% to under 5% simply by fixing their test data strategy.

Set up data creation and cleanup as part of your test infrastructure. Tests should create the data they need and clean up after themselves. This makes tests independent and removes the mystery of why tests pass on your machine but fail in CI.
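One way to get "create what you need, clean up after yourself" in Python is a context manager around a seeded data factory. `InMemoryDB`, `make_customer`, and `seeded_customer` are hypothetical names. A fixed seed makes the synthetic data predictable, and the `finally` block guarantees cleanup even when the test fails.

```python
import random
import uuid
from contextlib import contextmanager


class InMemoryDB:
    """Hypothetical stand-in for the application's database."""

    def __init__(self):
        self.customers = {}


def make_customer(seed: int) -> dict:
    """Build a synthetic but realistic-looking customer; same seed, same data."""
    rng = random.Random(seed)
    return {
        "id": f"test-{uuid.UUID(int=rng.getrandbits(128))}",
        "name": rng.choice(["Ana", "Ben", "Chloe", "Dev"]),
        "balance_cents": rng.randint(0, 100_000),
    }


@contextmanager
def seeded_customer(db: InMemoryDB, seed: int):
    """Create the data a test needs, then remove it even if the test fails."""
    customer = make_customer(seed)
    db.customers[customer["id"]] = customer
    try:
        yield customer
    finally:
        db.customers.pop(customer["id"], None)


db = InMemoryDB()
with seeded_customer(db, seed=42) as customer:
    assert customer["id"] in db.customers  # the test body would go here
assert db.customers == {}  # nothing left behind for the next test
```

Prefixing ids with `test-` is a small extra safeguard that makes leaked test data easy to spot and sweep up.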

Making CI/CD Integration Actually Work

Everyone talks about integrating tests into CI/CD pipelines, but most teams do it wrong. The key is layering your tests based on speed and reliability.

Fast tests (under 5 minutes) run on every commit. These are mostly unit tests and critical API tests. They catch obvious regressions quickly without slowing down development.

Medium tests (5-20 minutes) run on pull requests and before deployment. These include broader integration tests and core UI automation.

Slow tests (20+ minutes) run nightly or on release candidates. These are comprehensive end-to-end tests and performance tests.

This layered approach means developers get fast feedback for most changes while still maintaining comprehensive test coverage.
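The layering can be written down as a small lookup that a CI script might consult. The event names are hypothetical. This sketch also assumes the layers are cumulative (a pull request runs the fast layer too) and treats exactly 20 minutes as "medium"; the thresholds come from the text above.

```python
# Hypothetical mapping of CI events to the deepest test layer they should run.
# Layers are cumulative: a pull request runs the fast layer and the medium layer.
LAYERS = ["fast", "medium", "slow"]
DEEPEST_LAYER = {
    "commit": "fast",
    "pull_request": "medium",
    "pre_deploy": "medium",
    "nightly": "slow",
    "release_candidate": "slow",
}


def layers_for(event: str) -> list:
    """Return the layers to run for a CI event, cheapest first."""
    deepest = DEEPEST_LAYER[event]
    return LAYERS[: LAYERS.index(deepest) + 1]


def layer_for_runtime(minutes: float) -> str:
    """Assign a suite to a layer by runtime: under 5 min, 5 to 20 min, or longer."""
    if minutes < 5:
        return "fast"
    if minutes <= 20:
        return "medium"
    return "slow"


print(layers_for("pull_request"))  # ['fast', 'medium']
print(layer_for_runtime(12))       # medium
```

Running cheapest layers first means a broken build usually fails within the first few minutes, before the expensive suites even start.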

Handling Test Failures Like a Pro

Here's what separates successful automation from failed experiments: how you handle test failures. Flaky tests will kill your automation program faster than any technical limitation.

When a test fails, you need to know immediately whether it's a real bug or a test issue. Implement good logging and screenshot capture so you can debug failures without having to reproduce them locally.

More importantly, establish a "no flaky tests" policy. If a test fails intermittently, either fix it or delete it. Flaky tests train developers to ignore test failures, which defeats the entire purpose of automation.

7. Common Pitfalls and How to Avoid Them

7.1. The Over-Engineering Trap

I've seen teams spend months building elaborate frameworks with configuration files, abstraction layers, and custom DSLs that nobody else on the team understands. This always ends badly.

Signs of Over-Engineering:

- Custom frameworks that require extensive documentation

- Multiple abstraction layers that hide simple operations

- Configuration files with dozens of parameters

- Tests that require framework expertise to modify

Simple Automation Principles:

- Keep it readable: Another developer should understand your tests

- Prefer composition over inheritance

- Favor explicit code over clever abstractions

- Write tests like production code with proper structure

In practice, the bar is simple: a teammate should be able to understand and modify your tests without a PhD in your custom framework.

7.2. The UI Automation Obsession

Teams often start with UI automation because it feels the most like manual testing. This is backwards. UI tests are slow, brittle, and expensive to maintain. They should be the last layer of your automation strategy, not the first.

The Testing Pyramid (Bottom to Top):

- Unit Tests (70%) - Fast, reliable, isolated

- Integration/API Tests (20%) - Business logic validation

- UI Tests (10%) - Critical user journeys only

Start with unit tests, then API tests, then a few critical UI tests. The testing pyramid exists for good reasons – follow it.

7.3. Ignoring Mobile Until It's Too Late

If your application has mobile users (and most do), plan for mobile automation from the beginning. Adding mobile testing later is much more expensive than designing for it upfront.

Mobile Testing Options:

- Appium - Cross-platform mobile automation

- Espresso - Android native testing

- XCUITest - iOS native testing

- Detox - React Native testing framework

The mobile automation landscape has stabilized around Appium for cross-platform testing and native frameworks (Espresso for Android, XCUITest for iOS) for platform-specific needs. Choose based on your team's skills and how much platform-specific testing you need.

8. Measuring What Actually Matters

Most teams track the wrong metrics when it comes to automation QA. They focus on vanity metrics like test coverage percentages instead of the things that actually impact business outcomes.

The Metrics That Matter

Time to Feedback: How quickly do developers learn about issues after committing code? This should be measured in minutes, not hours. If your automated tests take 45 minutes to run, they're not providing useful feedback for developers.

Confidence Index: This is subjective but crucial. How confident is your team about deploying to production? Track this through surveys or by measuring how often deployments get delayed due to quality concerns.

Defect Escape Rate: What percentage of bugs make it to production despite your testing? This is the ultimate measure of your testing effectiveness. A good automation strategy should significantly reduce this number.

Test Maintenance Burden: How much time does your team spend fixing broken tests versus finding real bugs? If you're spending more time maintaining tests than finding issues, your automation strategy needs work.
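Two of these metrics reduce to simple ratios. The sketch below uses one common definition of defect escape rate (production bugs divided by all known bugs) and expresses maintenance burden as hours fixing tests per hour finding real bugs. The `QualitySnapshot` class and its input numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QualitySnapshot:
    """Hypothetical per-period numbers a team might pull from its tracker and CI."""
    bugs_caught_before_release: int
    bugs_found_in_production: int
    hours_fixing_tests: float
    hours_finding_bugs: float

    @property
    def defect_escape_rate(self) -> float:
        """Share of all known defects that reached production (lower is better)."""
        total = self.bugs_caught_before_release + self.bugs_found_in_production
        return self.bugs_found_in_production / total if total else 0.0

    @property
    def maintenance_ratio(self) -> float:
        """Hours fixing tests per hour finding real bugs (above 1 is a warning sign)."""
        if self.hours_finding_bugs == 0:
            return float("inf")
        return self.hours_fixing_tests / self.hours_finding_bugs


snapshot = QualitySnapshot(45, 5, hours_fixing_tests=12, hours_finding_bugs=8)
print(snapshot.defect_escape_rate, snapshot.maintenance_ratio)  # 0.1 1.5
```

Tracked per release, the trend matters more than any single value.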

ROI: Making the Business Case

Here's a simple framework for calculating automation ROI that actually works:

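Calculate your current manual testing cost. Include not just QA time, but developer time spent waiting for testing, deployment delays, and production incident response. Then compare that annual figure against the upfront automation investment to see how long the investment takes to pay back.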

For one medium-sized SaaS company, this calculation revealed they were spending $400,000 annually on manual regression testing when you included all the hidden costs. Their automation investment of $150,000 (tools, training, and initial development) paid for itself in less than six months.
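The payback arithmetic from that example fits in one function. `payback_months` is a hypothetical helper. The optional running-cost parameter is an assumption added to show how sensitive the result is to ongoing maintenance, which the example's figures don't break out.

```python
def payback_months(investment: float, annual_savings: float,
                   annual_running_cost: float = 0.0) -> float:
    """Months until net savings cover the upfront automation investment."""
    net_annual = annual_savings - annual_running_cost
    if net_annual <= 0:
        raise ValueError("automation never pays back with these numbers")
    return investment * 12 / net_annual


# Figures from the example above, assuming automation removes the whole
# $400,000 manual cost and has no running cost (optimistic).
print(payback_months(150_000, 400_000))           # 4.5
# Same, but with a hypothetical $100,000/year for maintenance and tooling.
print(payback_months(150_000, 400_000, 100_000))  # 6.0
```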

9. What's Actually Coming Next

Let's be realistic about the future. Most of the "revolutionary" trends you read about are years away from practical implementation. Here's what you can actually expect to see in the next 2-3 years:

9.1. AI Gets More Practical

AI won't write all your tests, but it will get much better at the mundane tasks. Expect significant improvements in test maintenance automation and much smarter test failure analysis. Tools that can automatically categorize test failures and suggest fixes will become standard.

Near-Term AI Improvements:

- Smarter test failure analysis with root cause identification

- Automated test maintenance that actually works reliably

- Test case generation from requirements and user behavior

- Predictive testing that focuses on high-risk areas

9.2. Security Testing Integration

Security testing will become as automated and integrated as functional testing is today. This isn't just about running security scans – it's about building security validation into every part of your testing strategy.

Security Testing Evolution:

- Shift-left security integrated into development workflows

- Real-time vulnerability detection during coding

- Automated compliance checking against security standards

- AI-powered threat modeling and risk assessment

9.3. Performance Testing Democratization

Performance testing tools will become as easy to use as functional testing tools are today. Expect to see performance validation built into standard CI/CD pipelines rather than being a specialized activity.

Performance Testing Trends:

- No-code performance testing for non-specialists

- Continuous performance monitoring in production

- AI-driven load pattern generation and optimization

- Real user monitoring integration with synthetic testing

10. Getting Started: A Practical Roadmap

10.1. Week 1-2: Assessment

Don't start by picking tools. Start by understanding your current pain points. What manual testing takes the most time? What bugs are escaping to production? Where are your biggest quality risks?

Assessment Checklist:

- ✅ Map current manual testing processes and time spent

- ✅ Identify most time-consuming test scenarios

- ✅ Document recurring bugs and their impact

- ✅ Survey developers, PMs, and support team for pain points

- ✅ Calculate current cost of manual testing and bug fixes

Talk to your developers, product managers, and customer support team. They'll tell you where automation could have the biggest impact.

10.2. Week 3-4: Pilot Planning

Pick one specific scenario to automate. Make it something that's currently painful and time-consuming, but not so complex that it will take months to implement.

Good First Automation Targets:

- ✅ Login/authentication flow

- ✅ Critical API endpoints

- ✅ Basic smoke tests for key features

- ✅ User registration process

- ✅ Core business workflows

Avoid Starting With:

- ❌ Complex user workflows

- ❌ Third-party integrations

- ❌ Edge cases or error scenarios

- ❌ Visual/UI-heavy tests

- ❌ Anything requiring external dependencies

10.3. Month 2: Implementation

Build your first automated test. Keep it simple. The goal is to learn, not to create the perfect test. You'll throw away most of this initial work anyway as you figure out what works for your team and application.

Implementation Focus:

- Start with API tests for faster, more reliable feedback

- Use existing tools rather than building custom frameworks

- Document everything you learn during the process

- Measure success with concrete metrics (time saved, bugs caught)
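As an example of starting with API tests, here is a minimal smoke test that uses only Python's standard library. The `/health` endpoint and its `{"status": "ok"}` response are hypothetical, and a small stub server stands in for your service so the sketch runs on its own. In a real suite you would point `check_health` at a test environment URL, and many teams would use pytest with an HTTP client library instead.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for your real service."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # silence per-request logging to keep test output clean


def check_health(base_url, timeout=5):
    """Smoke test: GET /health must return 200 and {"status": "ok"}."""
    with urllib.request.urlopen(f"{base_url}/health", timeout=timeout) as resp:
        assert resp.status == 200, f"unexpected status {resp.status}"
        payload = json.loads(resp.read())
    assert payload.get("status") == "ok", f"unexpected payload {payload}"
    return payload


def run_against_stub():
    """Start the stub on a free port, run the smoke test, then shut down."""
    server = HTTPServer(("127.0.0.1", 0), StubHandler)  # port 0 = any free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        host, port = server.server_address
        return check_health(f"http://{host}:{port}")
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(run_against_stub())
```

The test checks both the status code and the response body, because a 200 with the wrong payload is still a failure.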

10.4. Months 3-6: Expansion

Based on what you learned from your pilot, expand gradually. Add more tests to your existing framework before adding new frameworks or tools.

Expansion Strategy:

- Add 2-3 new test scenarios per sprint

- Focus on high-value, low-maintenance tests

- Build test data management early

- Integrate with CI/CD pipeline

- Train team members on automation practices

Focus on building a foundation that's reliable and maintainable. It's better to have 20 rock-solid tests than 200 flaky ones.
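For test data management, one common pattern is a small factory that builds valid objects with sensible defaults, so each test overrides only the fields it cares about. The `User` model and `make_user` helper below are hypothetical stand-ins for your own domain objects. Generating a unique email on every call keeps tests from colliding when they share an environment or run in parallel.

```python
import itertools
from dataclasses import dataclass, replace

_sequence = itertools.count(1)  # module-level counter used for unique values


@dataclass(frozen=True)
class User:
    """Hypothetical domain object; substitute your own model."""
    email: str
    password: str
    role: str = "customer"
    verified: bool = True


def make_user(**overrides):
    """Build a valid User with unique defaults; tests override only what they need."""
    n = next(_sequence)
    user = User(email=f"qa.user{n}@example.test", password="Placeholder-Pass-123")
    return replace(user, **overrides)


if __name__ == "__main__":
    print(make_user())                # default customer account
    print(make_user(role="admin"))    # admin, still with a unique email
    print(make_user(verified=False))  # unverified account for edge-case tests
```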

10.5. Month 6+: Optimization

Now you can start looking at advanced features like parallel execution, cloud infrastructure, and AI-assisted testing. But only after you have a solid foundation in place.

Advanced Optimization:

- Parallel test execution for faster feedback

- Cloud-based testing for better scalability

- AI-assisted test maintenance for reduced overhead

- Performance testing integration

- Security testing automation
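Parallel execution only pays off when tests are independent and share no state. The sketch below uses Python's `concurrent.futures` to run hypothetical test functions concurrently and collect pass/fail results; the fake tests simply sleep to simulate I/O-bound work such as HTTP calls. In practice you would usually rely on your runner's built-in support, for example the pytest-xdist plugin (`pytest -n 4`), rather than rolling your own.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def make_fake_test(seconds, passes=True):
    """Hypothetical test that sleeps to simulate I/O-bound work (e.g. HTTP calls)."""
    def test():
        time.sleep(seconds)
        assert passes, "simulated failure"
    return test


def run_parallel(tests, workers=4):
    """Run independent zero-argument tests concurrently.

    Returns ({test_name: passed}, wall_clock_seconds). A test fails by raising
    AssertionError; any other exception propagates as a genuine error.
    """
    start = time.perf_counter()
    results = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(fn): name for name, fn in tests.items()}
        for future in as_completed(futures):
            name = futures[future]
            try:
                future.result()
                results[name] = True
            except AssertionError:
                results[name] = False
    return results, time.perf_counter() - start


if __name__ == "__main__":
    suite = {f"test_{i}": make_fake_test(0.5) for i in range(4)}
    results, elapsed = run_parallel(suite, workers=4)
    # Run serially, these would take about 2 s; with 4 workers, about 0.5 s.
    print(results, f"{elapsed:.2f}s")
```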

11. The Bottom Line: Your Automation QA Journey Starts Now

Automation QA isn't about replacing human testers with robots. It's about freeing human testers from repetitive work so they can focus on the interesting problems that actually require human insight.

Key Takeaways for 2025

✅ Do These Things:

- Start small with your most painful manual testing scenario

- Focus on API testing first for maximum ROI and reliability

- Build simple, readable tests that anyone can understand and maintain

- Measure real business impact (time saved, bugs prevented, confidence gained)

- Integrate with CI/CD for continuous feedback and early bug detection

- Invest in team training and knowledge sharing across the organization

❌ Avoid These Pitfalls:

- Don't try to automate everything at once; it usually leads to failure

- Don't build elaborate frameworks that require PhD-level expertise

- Don't chase perfect test coverage; focus on high-value scenarios

- Don't ignore mobile testing until it's too late to add cost-effectively

- Don't tolerate flaky tests; they destroy trust in automation
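A simple way to catch flaky tests before they erode trust is to re-run a suspect test many times and look for mixed results. The sketch below does exactly that. `make_flaky_test` is a hypothetical test that fails on every third call, standing in for the timing or ordering problems behind real flakiness. Plugins such as pytest-repeat (`--count`) apply the same re-run idea inside pytest.

```python
from collections import Counter


def detect_flaky(test_fn, runs=20):
    """Re-run a zero-argument test; mixed pass/fail outcomes mean it is flaky.

    Returns (is_flaky, counts), where counts only includes non-zero outcomes.
    """
    outcomes = Counter()
    for _ in range(runs):
        try:
            test_fn()
            outcomes["pass"] += 1
        except AssertionError:
            outcomes["fail"] += 1
    is_flaky = outcomes["pass"] > 0 and outcomes["fail"] > 0
    return is_flaky, dict(outcomes)


def make_flaky_test(fail_every=3):
    """Hypothetical test that fails on every `fail_every`-th call."""
    calls = 0

    def test():
        nonlocal calls
        calls += 1
        assert calls % fail_every != 0, "intermittent failure"
    return test


if __name__ == "__main__":
    print(detect_flaky(make_flaky_test(), runs=20))  # mixed results: flaky
    print(detect_flaky(lambda: None, runs=20))       # always passes: stable
```

A test that fails on every run is reported as not flaky: it is simply broken, which is a different (and easier) problem to fix.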

The Future is Bright

The teams that succeed with automation QA are the ones that start small, focus on practical value, and build sustainable practices rather than chasing the latest trends.

Market Reality Check:

- The automation testing market will reach $59.91 billion by 2029 per Research and Markets

- 78% of organizations are already using or planning AI-driven testing according to LoadFocus statistics

- Some companies report 400% ROI within the first year of automation, per Grand View Research

- High-performing teams deploy 200x more frequently with better quality according to the DORA State of DevOps Report

Your Next Steps

Most importantly, remember that automation QA is a means to an end, not an end in itself. The goal is shipping better software faster, not having the most sophisticated testing infrastructure.

Action Items for This Week:

- Assess your current manual testing pain points

- Identify one critical user flow to automate first

- Research the right tools for your tech stack and team skills

- Calculate the potential ROI and present the business case

- Start small with a pilot project to prove value
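For the business case, a back-of-the-envelope ROI and break-even calculation is often enough to start the conversation. This sketch assumes a one-off build cost, a flat monthly maintenance cost, and a flat monthly saving in manual testing effort; all figures in the example are hypothetical. It leaves out harder-to-price benefits such as prevented production bugs.

```python
import math


def automation_roi(build_cost, monthly_maintenance, monthly_savings, months=12):
    """ROI over a horizon: (savings - total investment) / total investment."""
    investment = build_cost + monthly_maintenance * months
    savings = monthly_savings * months
    return (savings - investment) / investment


def break_even_month(build_cost, monthly_maintenance, monthly_savings):
    """First month in which cumulative savings cover cumulative costs, or None."""
    net_per_month = monthly_savings - monthly_maintenance
    if net_per_month <= 0:
        return None  # at these numbers the automation never pays for itself
    return math.ceil(build_cost / net_per_month)


if __name__ == "__main__":
    # Hypothetical: $12k to build, $500/month upkeep, $2.5k/month manual effort saved.
    roi = automation_roi(12_000, 500, 2_500)
    month = break_even_month(12_000, 500, 2_500)
    print(f"12-month ROI: {roi:.0%}, break-even in month {month}")
    # prints: 12-month ROI: 67%, break-even in month 6
```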

Recommended Resources:

- Martin Fowler's article on the Test Pyramid, a widely used framework for balancing test types

- Google's Testing Blog for cutting-edge testing practices

- Ministry of Testing for global testing community insights

- LoadFocus Testing Statistics for the latest market data

- Research and Markets Reports for comprehensive market analysis

- DORA State of DevOps Report for performance benchmarks

Start where you are, use what you have, do what you can. The perfect automation strategy is the one that actually gets implemented and provides value to your team and your users.

Ready to transform your testing strategy? The tools, techniques, and trends outlined in this guide are already helping many teams deliver better software faster. Your automation journey starts with a single test, so make it count.